The most exciting and innovative research currently being conducted relies on a slightly less exciting demand for methodological standardization. Why are standard methods so important? Allow us to explain.

The Human Genome Project, which began in 1990 and was finished in 2003, ushered in a new era of collaborative scientific efforts. During this truly monumental project, hundreds of scientists from the United States, Japan, Germany, China, Canada, and the United Kingdom successfully sequenced and compiled all 3.3 billion base pairs in the human set of genetic instructions. Over 92% of the sequence exceeded 99.99% accuracy.

To achieve this incredible accuracy, every team contributing sequence data to the project had to follow the same established battery of methodologies.

During the planning stages of the project, the National Research Council’s Committee on Mapping and Sequencing the Human Genome stated, “A mechanism of quality control is needed for the groups that are contributing sequencing information.” Such an immensely collaborative project was only possible through the standardization of sequencing techniques, laboratory sterilization procedures, data annotation, and the eventual bioinformatics-based processing and compilation of data.

The success of the Human Genome Project, along with the advent of technologies capable of producing immense amounts of data, has forever changed the scale of the questions scientists can ask. In addition, the Internet has made the world a smaller place, allowing the data generated by such projects to be shared and analyzed in real time. Mega-projects of this kind are becoming particularly relevant in neuroscience, as researchers explore the most complex object in the universe: the human brain.

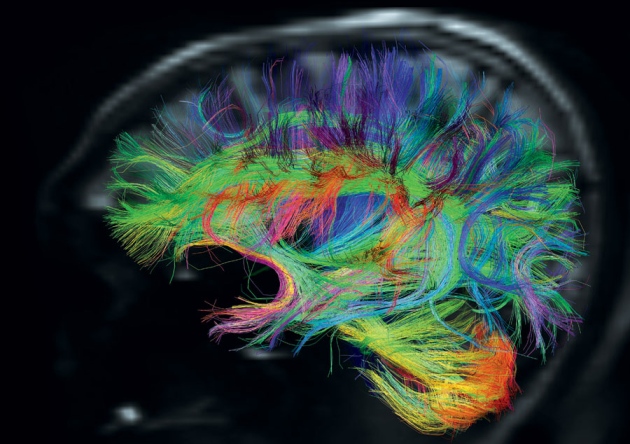

The most ambitious of such projects is the development of a “connectome,” a comprehensive map of all neurons and connections in the human brain. The development of a connectome (a term directly inspired by the word genome) is still in its infancy, but it is clear that methodological standardization is crucial to the project’s success. The project will gather data at both cellular and tissue scales using a variety of techniques, all of which must be carried out in a uniform fashion.

When asked about the importance of standardization in current large-scale neuroscience projects, Christof Koch, Chief Science Officer at the Allen Institute for Brain Science, said the following:

“Worrying about standards sounds like an unsexy activity, but once you have a standard then you can greatly accelerate progress. If you go back in history, standardization has often been the driver of key industrial innovations…”

At the cellular level, neural pathways will be mapped using histological dissection, staining, degeneration methods, two-photon imaging, electron microscopy, and axonal tracing. These data will be used in conjunction with data collected on a more macroscopic scale using non-invasive imaging technologies such as diffusion magnetic resonance imaging and functional magnetic resonance imaging. Each of these techniques has variations and subtly different manners of execution, so results from different laboratories are comparable only if everyone follows the same protocols. Through standardization, all the scientists contributing to the connectome project can successfully come together and work as a force for innovation.